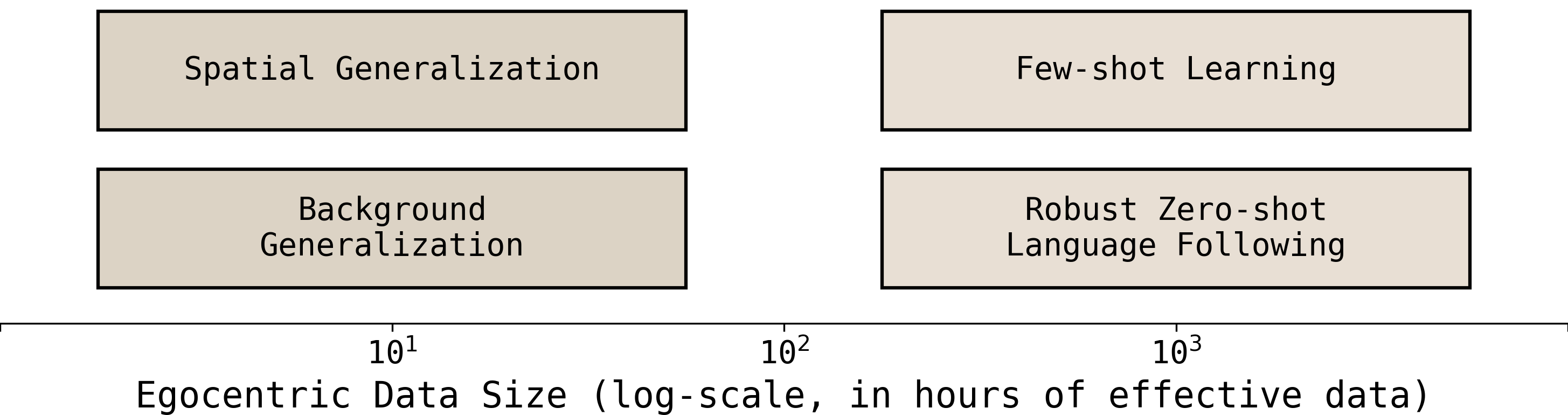

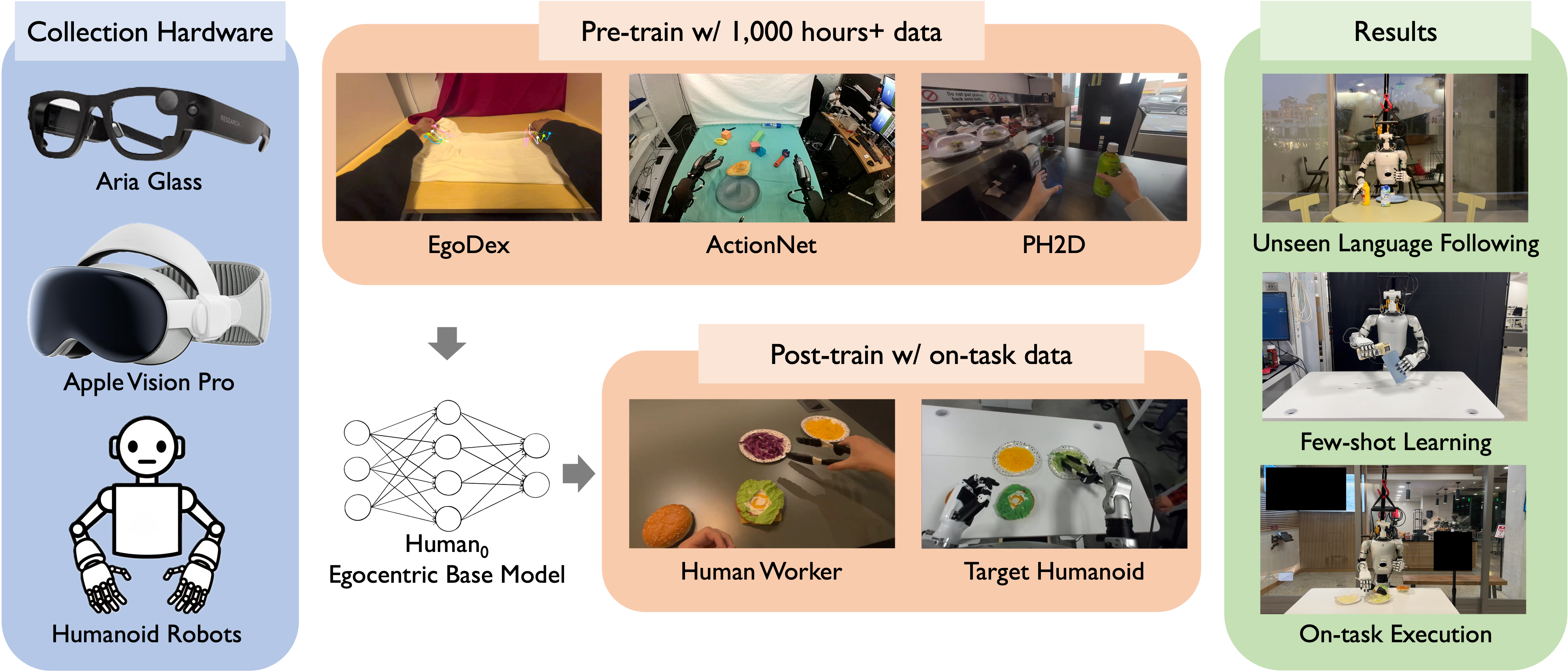

In-N-On is a training recipe that splits egocentric human data into two categories, in-the-wild and on-task, enabling zero-shot language following, few-shot learning, and improved robustness through targeted on-task data.

The egocentric dataset PHSD contains over 20 hours of on-task data, collected on H1 and G1 humanoids as well as from Aria and Apple Vision Pro devices.

[Figure 1 panel labels: Aria Glasses; Apple Vision Pro; Seen language instruction; Unseen language instruction]

Figure 1: Human0 leverages large-scale egocentric human-humanoid data from the PHSD dataset for pre-training and post-training, enabling strong instruction following on unseen tasks, few-shot execution, and improved on-task performance.

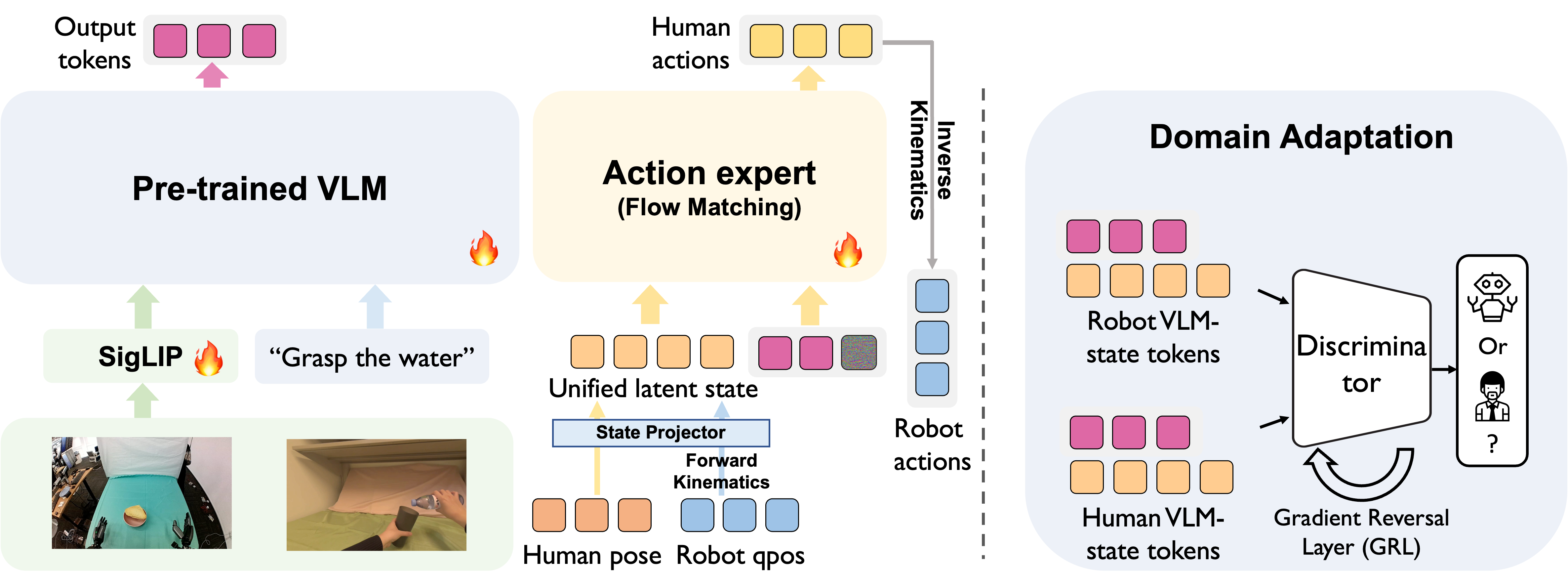

Figure 2: Our two-stage training pipeline pre-trains on large-scale in-the-wild human and robot data and post-trains on task-aligned demonstrations, using a domain-adversarial discriminator to learn embodiment-invariant representations for effective human-to-robot transfer.
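The caption names a domain-adversarial discriminator but does not say how it is trained. One common way to get embodiment-invariant features is a gradient reversal layer. The sketch below illustrates that general technique with hand-written gradients for a toy linear discriminator; it is not the authors' implementation. The function names, the label convention (1.0 for human, 0.0 for robot), and the weight `lam` are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def discriminator_grads(feature, disc_w, domain_label):
    """Binary cross-entropy gradients for a toy linear embodiment discriminator.

    domain_label is assumed to be 1.0 for human data and 0.0 for robot data
    (a hypothetical convention, not taken from the source).
    """
    logit = sum(w * f for w, f in zip(disc_w, feature))
    err = sigmoid(logit) - domain_label       # dL/dlogit for BCE on a sigmoid output
    grad_w = [err * f for f in feature]       # gradient used to update the discriminator
    grad_feature = [err * w for w in disc_w]  # gradient flowing back toward the encoder
    return grad_w, grad_feature


def gradient_reversal(grad_feature, lam=1.0):
    """Backward rule of a gradient reversal layer: scale the gradient by -lam.

    The forward pass of such a layer is the identity, so only the backward
    rule is shown here.
    """
    return [-lam * g for g in grad_feature]


# Toy example: a 2-D feature from a human clip (label 1.0).
feature = [0.5, -1.0]
disc_w = [2.0, 1.0]
grad_w, grad_feature = discriminator_grads(feature, disc_w, domain_label=1.0)
encoder_grad = gradient_reversal(grad_feature, lam=0.5)
```

The discriminator follows `grad_w` to get better at telling human from robot features, while the encoder receives the sign-flipped `encoder_grad` and is pushed toward features the discriminator cannot separate. In an autodiff framework the reversal would typically be a custom backward function rather than a manual step.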

@article{cai2025n,
  title   = {In-N-On: Scaling Egocentric Manipulation with in-the-wild and on-task Data},
  author  = {Cai, Xiongyi and Qiu, Ri-Zhao and Chen, Geng and Wei, Lai and Liu, Isabella and Huang, Tianshu and Cheng, Xuxin and Wang, Xiaolong},
  journal = {arXiv preprint arXiv:2511.15704},
  year    = {2025}
}